December 8, 2011 report

UIUC team will show can't-tell photo inserts at Siggraph

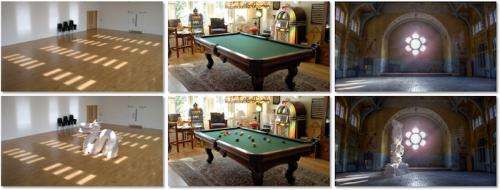

(PhysOrg.com) -- Visitors to this month's Siggraph Asia conference on computer graphics, held December 12 to 15, will see a presentation from a team at the University of Illinois at Urbana-Champaign on how to alter photos by adding objects that were not there before. The team will present its paper, Rendering Synthetic Objects into Legacy Photographs, which details the approach.

So what? Doctored photos are nothing new. But this method has more going for it than the older techniques used by the Kremlin or by budding Photoshop enthusiasts. The team (Kevin Karsch, Varsha Hedau, David Forsyth, and Derek Hoiem) can simulate the scene's lighting conditions so that the inserted object looks realistic.

Humans are quick to detect photo fraud, Karsch maintains, often by spotting lighting inconsistencies in a doctored photograph.

In contrast, he says, images produced with the team's method can fool even people who pride themselves on spotting such differences.

If you don't know the perspective or the geometry of an object, he commented, you are just manipulating pixels, and the results are unconvincing.

In their program, the user is asked to select the light sources in the picture. An algorithm then reconstructs the 3-D geometry and lighting of the scene, and the synthetic object is inserted into this new environment. The program adds shadows and highlights to the object before rendering the result back into a 2-D image.
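The article does not say exactly how the shadows and highlights computed in the 3-D model are transferred onto the original photograph. A widely used technique for this step is differential rendering (Debevec, 1998): render the estimated scene model with and without the object, add the difference between the two renders to the real photo, and use the rendered object directly wherever it is visible. The sketch below assumes that technique and is not necessarily the team's implementation; the function name `differential_composite` and the grayscale nested-list image format are hypothetical choices for illustration.

```python
from typing import List

# Hypothetical image format: grayscale, rows of floats in [0, 1].
Image = List[List[float]]


def differential_composite(photo: Image, render_with: Image,
                           render_without: Image, mask: Image) -> Image:
    """Composite a synthetic object into a photo by differential rendering.

    photo          -- the original (legacy) photograph
    render_with    -- render of the estimated scene model WITH the object
    render_without -- render of the same scene model WITHOUT the object
    mask           -- 1.0 where the object itself is visible, 0.0 elsewhere
                      (fractional values blend, e.g. at antialiased edges)
    """
    out: Image = []
    for r in range(len(photo)):
        row: List[float] = []
        for c in range(len(photo[r])):
            m = mask[r][c]
            # Change the object causes in the scene model: negative where it
            # casts shadows, positive where it reflects light onto surfaces.
            delta = render_with[r][c] - render_without[r][c]
            # Apply that change to the real photo rather than to the model,
            # so the photo's own detail and texture are preserved.
            background = photo[r][c] + delta
            # Where the object is visible, take the rendered object pixel.
            value = m * render_with[r][c] + (1.0 - m) * background
            row.append(min(1.0, max(0.0, value)))  # clamp to valid range
        out.append(row)
    return out


if __name__ == "__main__":
    photo = [[0.8, 0.8, 0.8]]
    without = [[0.7, 0.7, 0.7]]
    with_obj = [[0.5, 0.4, 0.7]]  # shadow at pixel 0, object at pixel 1
    mask = [[0.0, 1.0, 0.0]]
    print(differential_composite(photo, with_obj, without, mask))
```

In this toy example the object's shadow darkens the real pixel at column 0 by the same amount it darkens the model (about 0.6 instead of 0.8), the object pixel is taken from the render, and the untouched pixel keeps its original value. Adding the difference, instead of pasting the render wholesale, means errors in the estimated model largely cancel out.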

The weakness of existing photo editing programs, the team says, is that they simply insert a 2-D object. Karsch, a computer science doctoral student advised by David Forsyth, explains that image editing software that allows only 2-D manipulations does not account for the high-level spatial information present in a scene, while 3-D modeling tools can be complex and tedious for novice users.

The team set out to extract 3-D scene information from a single image to allow seamless object insertion, removal, and relocation.

The process involves three phases: luminaire inference, perspective estimation (depth, occlusion, camera parameters), and texture replacement. In their paper, the team says the method can realistically insert synthetic objects into existing photographs without requiring access to the scene or any additional scene measurements.

“With a single image and a small amount of annotation, our method creates a physical model of the scene that is suitable for realistically rendering synthetic objects with diffuse, specular, and even glowing materials while accounting for lighting interactions between the objects and the scene.”
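The perspective-estimation phase recovers camera parameters, which is what allows a 3-D scene model to be turned back into a 2-D picture with the right foreshortening. The article does not give the team's camera model; as a minimal sketch, assuming an ideal pinhole camera with no lens distortion and camera coordinates with x to the right, y down, and z pointing forward (a common computer-vision convention), a 3-D point projects to pixel coordinates as follows. The names `project_point`, `focal_px`, `cx`, and `cy` are hypothetical.

```python
from typing import Tuple


def project_point(point: Tuple[float, float, float], focal_px: float,
                  cx: float, cy: float) -> Tuple[float, float]:
    """Project a 3-D camera-space point to pixel coordinates (pinhole model).

    focal_px -- focal length expressed in pixels (assumed known/estimated)
    cx, cy   -- principal point, usually near the image center
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    # Perspective division: offsets from the image center shrink with depth.
    u = focal_px * x / z + cx
    v = focal_px * y / z + cy
    return (u, v)


if __name__ == "__main__":
    print(project_point((0.5, -0.25, 2.0), 800.0, 320.0, 240.0))
    print(project_point((0.5, -0.25, 4.0), 800.0, 320.0, 240.0))
```

With an 800-pixel focal length and principal point (320, 240), the point (0.5, -0.25, 2.0) lands at pixel (520.0, 140.0); moving it twice as far away, to depth 4.0, halves its offset from the principal point, giving (420.0, 190.0). A flat 2-D paste-in ignores exactly this kind of depth-dependent scaling.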

Potential applications include interior design, where decorators might photograph a room and experiment with inserting different furniture and objects. Other possibilities include entertainment and gaming.

More information: kevinkarsch.com/publications/sa11.html

© 2011 PhysOrg.com